Since leaving St John’s, Dr Shruti Badhwar (2009) has co-founded Embody, a tech start-up in San Francisco that marries acoustics and data science to create a personalised audio profile. Find out what this means for the future of listening by reading the interview below.

Hello Shruti! Briefly summarise the aims of Embody. How is this technology different from that made by other audio companies?

Embody is an audio technology company that delivers audio tailored to each customer’s ear shape. The shape of our ears determines how we hear sounds, and Embody’s personalised profiles allow users to experience audio as they would in real life (ie in 3D), creating a fully immersive experience for the user, whether they are listening to music, playing or watching a game, or streaming an event.

There have been a few companies in the past that have attempted to bring 3D audio technology to the market, but we are different from these in several key ways.

First, we are device agnostic, which means we can work with different operating systems.

Second, the sound produced by our personal auditory profile results in a much more natural sound for users than that produced by other software.

Finally, our speed is in the order of seconds, not hours or days.

How did you come up with the idea?

I had worked on the topic of human-machine interaction at IBM. It seemed to me that it was much more natural for users to have smarter experiences with their ears as opposed to their eyes. After a conversation about this at a conference, I was introduced to my co-founder, Kapil Jain. That is when I learnt that every person’s hearing is unique.

While personalisation of audio had been looked at by academics for years, it still remained an issue in the real world as it required acoustic measurements or a 3D scan of the end consumer (ie the listener). Kapil and I realised that data science could bridge this gap between academia and the real world, and that’s when we decided to combine forces.

What are your team and working environment like?

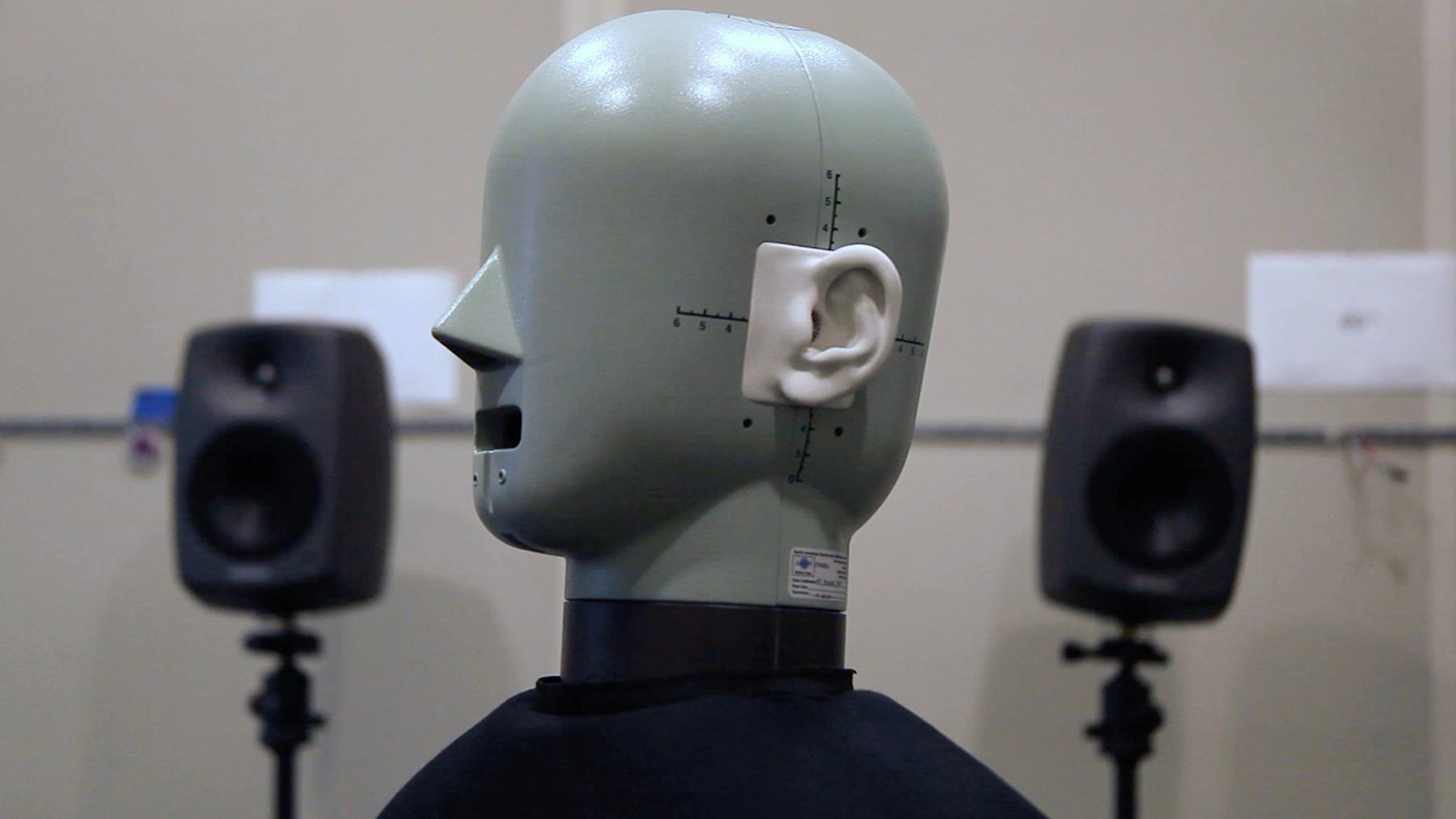

At the moment, we have fourteen full-time employees, three interns, Heeshee (our KEMAR head) and Bubbles (our dog) on the team. Coming from a range of engineering and creative backgrounds, we tend to complement each other and work collaboratively. Every so often we get together for a music jam session, play ping-pong and have long discussions on food! As our customer base grows, we will be looking to expand our technical and business team.

Your scientists are able to create a ‘personal audio profile’ based on a single image of anyone’s right ear. How do you actually do this?

Our ability to localise sound depends upon how the sound interacts with the folds and shape of our ears, which are a unique signature of every person. The relationship between the ear and the personal audio profile is too complicated to be modelled analytically, so we use data and machine learning to understand this relationship. The ground truth for our machine-learning models is based on the acoustic data measured in the lab.

Heeshee helps us calibrate our measurement by providing a known response to a sound impulse. Using an image of just one ear, we are able to achieve a high degree of accuracy while keeping the user experience as frictionless as possible.

What’s the difference between the sound you hear with a generic audio profile and a personalised profile?

With a generic audio profile, the auditory experience lacks richness and is quite muddy at high frequencies. With a personalised audio profile, the audio will sound crisper and cleaner. The user will also experience better localisation, especially for discerning front-to-back or up-and-down movement of the sound source, mimicking the 3D audio experience we have in real life.

Once you have built an audio profile for someone, how do they then use this?

We are launching our technology in partnership with a few headset companies this year. These headsets come with software that is powered by Immerse™, our flagship product for personalised spatial audio. Our next product, Dive™, is currently in beta stage and will allow users to stream personalised content on any pair of headphones or devices such as phones, tablets or computers.

Who are your main customers?

Embody is a business-to-business company and enterprises are our main target audience. We are also building partnerships with content creation companies, for both gaming and music.

We have had a lot of interest from sound designers who want to use our personalised 3D spatial audio technology (SpatialMix™) to expand their stories into new dimensions, and we are now looking to collaborate with content distributors who would be interested in using Dive™ to deliver immersive streaming experiences to their audience.

It can be hard for start-ups to get off the ground. How do you fund Embody and how do you see the company expanding?

We have been very fortunate to have support from quite a few early-stage investors who share the vision of Embody. We are currently working on the next round of venture funding.

Our aim is to grow into an audio technology company that impacts the lives of people globally. We want people to hear better, smarter and faster. As we raise more funds, our goal is to grow geographically, expand into multiple business verticals and invest in research and development of new technologies.

What will the constantly changing world of audio look like in five to ten years and how are you preparing for this?

In the next five to ten years, we will see the world of audio being disrupted by AI and 5G technologies. Our current technology is designed to be experienced on any device, anywhere, and our aim is to use AI to deliver directional and immersive audio that is aware of the user’s environment.

Currently, the maximum number of audio channels that can be transmitted wirelessly is limited to sixteen, even though the original audio was recorded with eight times more spatial resolution. With 5G connectivity, a much higher bandwidth will be available for content sharing, leading to a much better streaming experience.

Our goal is to be one of the leading technology providers when this happens.

Explore Shruti’s company Embody.